0161

Mark J.P. Kerrigan<sup>a</sup>*, Simon Walker<sup>a</sup>, Mark Gamble<sup>b</sup>, Sian Lindsay<sup>c</sup>, Kate Reader<sup>d</sup>, Maria-Christiana Papaefthimiou<sup>e</sup>, Loretta Newman-Ford<sup>f</sup>, Mark Clements<sup>g</sup>, Gunter Saunders<sup>h</sup>

<sup>a</sup>Educational Development Unit, University of Greenwich, London, UK; <sup>b</sup>Centre for Learning Excellence, University of Bedfordshire, UK; <sup>c</sup>Learning Development Centre, City University, London, UK; <sup>d</sup>Schools of Arts and Social Sciences, City University, London, UK; <sup>e</sup>Centre for the Development of Teaching and Learning, University of Reading, Reading, UK; <sup>f</sup>Learning and Teaching Development Unit, UWIC, Cardiff, UK; <sup>g</sup>School of Life Sciences, University of Westminster, London, UK; <sup>h</sup>Westminster Exchange, University of Westminster, London, UK

Abstract

The Making Assessment Count (MAC) project started at the University of Westminster in 2008. It sought to align staff and student expectations of feedback and support greater use of feed-forward approaches. A baseline analysis of staff views in the School of Life Sciences suggested that students did not make strategic use of the feedback they received. A similar analysis of the student position revealed that as a group they felt that the feedback provided to them was often insufficiently helpful. To address this dichotomy, a MAC process was developed in the School of Life Sciences and trialled with a cohort of about 350 first-year undergraduate students. The process was based on a student-centred, three-stage model of feedback: Subject specific, Operational and Strategic (SOS model). The student uses the subject tutor's feedback on an assignment to complete an online self-review questionnaire delivered by a simple tool. The student answers are processed by a web application called e-Reflect to generate a further feedback report. Contained within this report are personalised graphical representations of performance, time management, satisfaction and other operational feedback designed to help the student reflect on their approach to preparation and completion of future work. The student then writes in an online learning journal, which is shared with their personal tutor to support the personal tutorial process and the student's own personal development plan (PDP). Since the initial development and implementation of the MAC process within Life Sciences at Westminster, a consortium of universities has worked together to maximise the benefits of the project outcomes and collaboratively explore how the SOS model and e-Reflect can be exploited in different institutional and subject contexts. This paper presents and discusses an evaluation of the use of the MAC process within Life Sciences at Westminster from both staff and student perspectives. In addition, the paper will show how the consortium is working to develop a number of scenarios for utilisation of the process as a whole as well as the key individual process components, the SOS model and e-Reflect.

Keywords: assessment; consortium; coursework; efficiency; eReflect; exam; feedback; feedforward; JISC; MAC; online; PDP; peer; reflection; SOS model; strategy; tutor; VLE

*Corresponding author. Email: M.J.P.Kerrigan@greenwich.ac.uk

(Received 12 June 2011; final version received 30 June 2011; Published: 31 August 2011)

ISBN 978-91-977071-4-5 (print), 978-91-977071-5-2 (online)

2011 Association for Learning Technology. © M.J.P. Kerrigan et al. This is an Open Access article distributed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivs 2.0 UK: England & Wales licence (http://creativecommons.org/licenses/by-nc-nd/2.0/uk/) permitting all non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

DOI: 10.3402/rlt.v19s1/7782

In recent years, the university sector has focussed attention and resource on feedback, not directly because of its centrality in the learning process, but because it has been consistently flagged as a problem area by the National Student Survey (NSS). Students complain about ineffective feedback via the NSS but staff also have reason to be disgruntled. Many spend considerable time marking work and providing valuable feedback only to see piles of uncollected assignments, suggesting disinterest and disillusionment amongst the students (Winter and Dye 2005). Many staff strongly believe that students only pay attention to the mark they receive and make little attempt to engage with their feedback (Wojtas 1998; Mutch 2003). Some research has suggested that withholding the mark can lead to greater engagement by students with their feedback (Carless 2006) and an increase in the value added by the feedback (Nichol 2007).

Assessment feedback has the potential to enhance achievement but only if the right balance can be struck between measuring performance and shaping and developing the individual (Gibbs and Simpson 2004). It is accepted that the balance between assessment of learning and for learning has leaned too much towards the former, driven by the need to measure student performance. Such emphasis, driven to some extent by greater numbers of students and modularisation, leads to bunching of assessments (Price and O'Donovan 2008) and less opportunity for students to derive benefit from ‘practice’ application of knowledge and ideas with feedback often coming too late for it to make much difference to their performance (Higgins, Hartley, and Skelton 2002).

Given the problems with formative assessments, it is imperative that feedback provided on marked work is sufficiently well crafted to help the student move forward. The feedback, irrespective of delivery, also needs to be linked to the processes that provide an opportunity for the student to analyse and make use of the feedback in what is now termed a ‘feed-forward approach’ (Hounsell, Xu, and Tai 2007). One missed avenue for feed-forward on assessed coursework is the personal tutor as they are often out of the loop on their tutee's performance until it is too late for them to make a difference in terms of ensuring that feedback is acted upon and leads to a definitive plan for improvement. This apparent decline in effectiveness can be linked to higher numbers of students and modularisation.

Many universities have responded to the need to fundamentally revisit the feedback process, from delivery through to action, by making more use of technology, for example, online marking and coursework return to increase speed. This should enhance the likelihood that a student will utilise the feedback received in their next similar assignment (Denton et al. 2008). In addition, technology allows for feedback to be returned in different ways, and there is emerging evidence that suggests alternatives to written feedback can lead to better formative feedback experiences (Macgregor, Spiers, and Taylor 2011). At Sheffield Hallam University a system integral to the institutional virtual learning environment links the electronic release of marks to action on the part of the student. Here students do not see their grade until they have looked at the electronic feedback that has been provided and have had the opportunity to write a reflective entry into the system (Hepplestone 2010). However, there is the potential, given the NSS driver, that the use of technology could fuel superficial tick-box approaches that may satisfy the customer but do little to guarantee genuine personal development and improvement.

Technology may make a quality difference to feedback where it is part of a process that has at its heart the facilitation of meaningful interaction between students and their tutors, either online, face to face or both. In this paper, a process [the Making Assessment Count (MAC) process] designed to facilitate a dialogue that connects the feedback the student receives on their work, through their reflection, to the support and guidance of their tutor will be described and evaluated. The consequences of the process on students and staff will be discussed. In addition the paper will show how it is becoming possible for other universities and different subject areas to adapt the process to suit their own needs and priorities by working together as part of a strategic consortium.

Data from our baseline activities demonstrated that the feedback staff provided was deemed useful, yet students wanted more as they felt that would enhance their learning (Kerrigan et al. 2009). However, there was a significant misalignment between student actions and staff perceptions of those actions in relation to how students use feedback and the value they place on the written comments. To address these core issues, a process, termed MAC, was developed to increase the amount of feedback students receive, demonstrate to staff that students act on feedback and enhance communication between students and their tutors. These ideas developed into a new tripartite model of student feedback, the SOS model: Subject, Operational and Strategic (Figure 1).

Figure 1.

The SOS Model of student feedback.

The feedback on the students’ returned work mostly focusses on material aligned to academic performance and the development of subject matter and skills, indicating a level of performance, suggesting improvements and highlighting good achievement.

After collecting their assignment and reading their subject feedback, students complete an online self-review questionnaire. This requires close engagement with the feedback they have been given and focusses on both the process and outcomes of learning: for example, students have to indicate how long they spent on the assignment, whether the guidance they had been given was appropriate and how well they understood the feedback they had received. Their responses are then processed server side, and personalised reports are sent to students instantaneously via email. Importantly, the report also contains a graphical representation of the student's performance on all assignments completed. The online system used to complete the questionnaires and produce the reports is known as e-Reflect.
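The operational step can be illustrated with a minimal sketch. It is not the e-Reflect implementation: the field names, the text bar standing in for the graphical representation, the 5-hour threshold and the wording of the prompts are all assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass
class SelfReview:
    """One student's answers to a self-review questionnaire (hypothetical fields)."""
    assignment: str
    mark_percent: float          # mark awarded for the assignment
    hours_spent: float           # self-reported time spent on the assignment
    guidance_appropriate: bool   # was the guidance given appropriate?
    understood_feedback: bool    # did the student understand the feedback?


def operational_report(reviews: list[SelfReview]) -> str:
    """Build a plain-text operational report from all reviews completed so far."""
    lines = ["Operational feedback report", ""]
    for r in reviews:
        # A crude text bar stands in for the graphical representation of performance.
        bar = "#" * round(r.mark_percent / 10)
        lines.append(f"{r.assignment:<12} {r.mark_percent:5.1f}% {bar}")
    latest = reviews[-1]
    lines.append("")
    # Illustrative prompts only; the 5-hour threshold is an arbitrary assumption.
    if latest.hours_spent < 5:
        lines.append("Consider whether you allowed enough time for this assignment.")
    if not latest.guidance_appropriate:
        lines.append("Raise the assignment guidance with your module tutor.")
    if not latest.understood_feedback:
        lines.append("Ask your tutor to clarify the feedback before your next assignment.")
    return "\n".join(lines)
```

In this sketch the prompts are driven only by the most recent questionnaire, while the performance summary covers every assignment completed, mirroring the description of the report above.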

In the final step of the SOS model, students use the Operational report and their Subject feedback as a prompt to write short reflective entries, focussing on actions they believe they need to take to improve, in an online learning journal that is shared with their personal tutor. Personal tutors can comment on and extend the reflections in the learning journals and suggest further strategic action. Once this stage is completed, both tutors and students can take better advantage of their face-to-face contact time: students enter the tutorial better able to articulate their difficulties, while tutors, who can refer to their tutees’ learning journals both before and during the tutorial, are better prepared to give appropriate support and guidance.

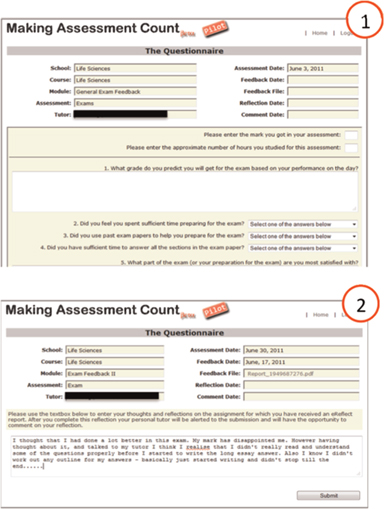

The e-Reflect tool is an integral component of the MAC process (step 2), helping to encourage the student to think about their feedback and approaches to study, as well as providing additional operational feedback. Importantly, e-Reflect also serves as a ‘bridge’ between the student's assignment feedback and their personal tutor. During the initial pilot phase, e-Reflect 1.0 was built using Excel macros; for the larger-scale rollout, e-Reflect 2.0 was developed with central computing services that linked to the student records system (via RSS), enabling more effective processing and report generation. As more institutions became interested in adopting e-Reflect, e-Reflect 3.0 was developed as a freestanding open source tool (Figure 2). In e-Reflect 3.2 (current version), questionnaire authoring by staff, questionnaire completion by students, report generation and storage, and updating/sharing of the learning journal are all completed within a single system. There is also a system of email alerts, which tell staff when a tutee has completed a reflection and students when their tutor has commented on their journal.

Figure 2.

Screenshots of the MAC tool. (1) The customisable online questionnaire students are asked to complete and (2) the area where they enter their reflective log.

The MAC process was trialled on a large scale involving 380 undergraduates and 35 staff in the School of Life Sciences and subsequently evaluated by questionnaire and face-to-face interviews. This school was chosen as two members of the project team were active lecturers in it and had access to large numbers of students. It is important to note that the MAC process is not discipline specific and is easily transferable to any subject. Both staff and student experiences were explored and analysed.

Prior to the face-to-face interviews, a questionnaire was offered to the entire cohort and completed by 65 students. This disappointing response was attributed to the timing of the questionnaire: it coincided with the end of term. To increase our understanding of the student experience, a second evaluation is planned at the beginning of the new academic year aimed at the same initial cohort. Analysis largely reveals a picture of positive student views of the MAC process. A majority of respondents indicated that they had used e-Reflect either because it gave them extra feedback, helping them to realise mistakes and prepare for other assignments, or because they thought that it was a way to improve and keep track of their progress. About 30% of respondents thought that using e-Reflect had helped them to build a better relationship/communicate more with their tutor. Conversely, about 10% said the process was time consuming and like an extra assignment. This response was surprising: the online questionnaire only took a few minutes to complete and the online learning journal only required a paragraph of text. A review of the student responses indicated that in some cases they were writing large reflections and thus spending too long on the process. To address this, students were given support on how to complete the learning journal. Furthermore, in one instance the completion of e-Reflect was linked to a grade and so viewed by some as an assignment; following a review it was decided that this was not the best embedding of the process and a more student-centred, participatory approach was adopted instead. A majority of the respondents answered yes to the question ‘Did using e-Reflect help you to do any more of the things you feel you should do when you get a piece of marked coursework returned with feedback?’

It should be noted that only a small proportion of students who did not engage with MAC at all completed the questionnaire. It has to date proved impossible to gather together a significant number of such students to elicit more on the basis of their lack of engagement. There is anecdotal evidence from academic staff that it was not just the high-achieving students that engaged, although equally many staff felt that it was likely that the majority of the ‘non-engagers’ would be those students needing help and support most. This is borne out by the fact that, of the students in the undergraduate cohort who did not progress, hardly any had engaged at all with e-Reflect.

Whilst the questionnaire data provided a good empirical base, the data from the face-to-face interviews highlighted a variety of straightforward benefits. Excerpts are shown in Table 1. The students who took part in the face-to-face interviews were drawn from those who completed the initial questionnaire.


Not everything that students said was positive. However, consistent amongst students was the view that the MAC process was only going to be ‘really good’ or ‘make a difference in the long run’ if the feedback received on work was understandable and if personal tutors regularly commented on students’ learning journals and spoke to students about their feedback. This notion that students are now able to give feedback to their tutor about the quality and ‘fitness-for-purpose’ of their feedback has raised some interesting questions and prompted useful actions. Comments around these two themes of the quality of feedback and tutor engagement with the MAC process are shown in Table 2.


All of the students who were interviewed and recorded had volunteered to do so in response to an email request. It is therefore possible that our sample of interviews is biased towards students who are perhaps the ‘high achievers’, the more ‘aspirational’ or the more proactive and engaging. Whilst this cannot be ruled out, it was the case that none of the students interviewed were shy about providing negative feedback about the process. Of the 11 students interviewed, 8 achieved an average mark of ≥60% and 3 achieved an average mark of ≥40% but <60%. When comparing the overall view of e-Reflect and its real/potential value there was no difference between the groups, which suggests that academic performance was not the sole determinant of their perception.

Generally, academic staff were not as positive about the MAC process. One of the 11 staff interviewed thought that MAC was of no use whatsoever, whilst three others agreed that ‘it clearly affected some students in a positive way’. The rest of the staff interviewed held a view somewhere in between, feeling that any initiative to highlight to students the need to pay attention to their feedback was good. Although almost all staff could see the potential of the MAC process and thought that it was something worth developing further, a number of significant issues and problems were raised. These ranged from not having the time to engage fully, through to significant doubts that the process would help the ‘weaker’ student.

A questionnaire sent to staff also highlighted the degree to which staff see potential for the MAC process, with 10 out of 12 respondents agreeing that it is ‘very good in principle’. However, 33% highlighted a lack of student engagement as a problem, whilst 25% of respondents highlighted the value of the MAC process for monitoring student progress. Encouragingly, over half of the staff respondents thought that the MAC process had improved active dialogue between students and staff over students’ work and development, and around 40% thought that the MAC process had impacted on the way in which they tutored and/or provided feedback to students (Table 3). Of note, two staff also stated that implementation of the MAC process had impacted on the way that they approached provision of feedback. Another member of staff changed the way he instructed his teaching team to deliver coursework feedback, influencing 12 members of academic staff, some of whom were not directly engaged with MAC. Collectively this course team agreed to focus more on providing students with ‘action points’ that they should consider in order to improve.


As part of the larger evaluation and support for future design, staff were asked how they deliver support on feedback and students were asked who they talk to about their coursework. This resulted in some interesting data linked to the use of the MAC process and highlighted a potential misalignment in activity. Students appear to be willing to discuss their feedback with their personal tutor as well as the person who marked their work and indeed with a ‘third party’ member of staff who they like (Figure 3). This suggests that the model of a tutor supporting action on feedback is valid and highlights that a professional relationship is important in this process. Interestingly, students seek more than one source of support; this may not always include the marker or their personal tutor. Whilst it is well known that peer feedback is important, the ability for students to comment and suggest actions on their peers’ feedback is an interesting extension. Indeed, one could build this into the MAC process and permit students to select with whom their feedback is shared. Furthermore, these data show that feedback on a script is often shared with more than one audience: this should be considered when feedback is constructed.

Figure 3.

The students’ sharing of feedback.

As these data suggest that students are engaging with staff about their feedback, we then asked staff how they are being contacted (Figure 4). Those who responded with either agree or strongly agree were grouped as positive, neither agree nor disagree as neutral and disagree or strongly disagree as negative. Interestingly, there did not appear to be a dominant method by which the students contacted staff for support with their feedback. There was a slight preference towards support in class, which would align with Figure 3, but the number of staff who gave a neutral response prevents this from being conclusive. These data do suggest that students are willing to accept additional feedback support by email and that some staff provide this.
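The grouping of responses described above can be sketched as follows. This is an illustration rather than the analysis script used in the study; the function name and the exact response labels are assumptions.

```python
from collections import Counter

# Mapping from five-point agreement responses to the three reporting groups.
GROUPS = {
    "strongly agree": "positive",
    "agree": "positive",
    "neither agree nor disagree": "neutral",
    "disagree": "negative",
    "strongly disagree": "negative",
}


def group_responses(responses):
    """Count responses per group, ignoring case and surrounding whitespace."""
    counts = Counter(GROUPS[r.strip().lower()] for r in responses)
    # Always report all three groups, including any with a zero count.
    return {g: counts.get(g, 0) for g in ("positive", "neutral", "negative")}
```

A response outside the five expected labels raises a `KeyError` in this sketch, which makes data-entry problems visible instead of silently dropping them.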

Figure 4.

How staff are contacted by students to provide feedback support.

Finally, we asked staff how many students contacted them about the last piece of marked work they had returned (Figure 5). Whilst there were a few instances in which all students contacted the member of staff, most staff indicated that 10% or fewer of their students had contacted them. Whilst initially this could appear to be of concern, the data from Figure 3 suggest that 43% of the feedback support students engage with is from peers and 56% from academics, of which 10% may not be related to the piece of work. It could be argued therefore that under 1% of respondents took no action on their feedback.

Figure 5.

Analysis of students who contacted staff for support.

Following the successes of the original MAC project, a number of other universities [specifically Bedfordshire, City University London, Greenwich, Reading and University of Wales Institute Cardiff (UWIC)] have been exploring how best to make use of the project outputs. This has included consideration of the MAC process as a whole, the SOS model and how best to utilise the e-Reflect tool. The notion of working with ‘competitors’ is complex as each institution within the consortium draws from the same student pools but, importantly, we have realised that the learning and support gained from working together are significant. With each institution developing a different ‘flavour’ of MAC, building on their own interests/expertise, and running a pilot with associated evaluation, everyone will benefit from the future findings (Figure 6).

Figure 6.

Enhancement of the MAC process by working as a consortium.

At UWIC the MAC process was piloted on an essay assignment with 50 first-year undergraduate students on the BSc Sports Science programme. The self-review questionnaire was adapted to the context: it asked whether students had made a plan for their essay before starting, whether they thought the mark received was as high as they could have achieved and what they planned to do next with the assignment, which gave a novel approach for MAC. A focus group of students at UWIC were generally extremely positive about the experience. They all said that they had benefited from the experience of using the MAC model, including the e-Reflect questionnaire and journal. When asked how they had benefited, most responded that it had made them think deeply about their own strengths and weaknesses, the ways in which they approached their assessment task, areas for future development and the usefulness of tutor feedback.

At City University London there is strong emphasis on engaging students with the feedback that they receive from their tutors, as evidenced in the International Politics department, which has a well-developed face-to-face personal tutorial scheme for students. As a consequence, the International Politics department plans to use an adapted form of the MAC process (academic year 2011–2012) to help link the students’ work with the face-to-face tutorial meetings. As in the original MAC model, students will complete an online self-review questionnaire; however, staff will not comment online on students’ reflections but instead students will book a face-to-face tutorial using an online Moodle scheduler. To achieve this, the Educational Support Team has built the MAC process into Moodle (Table 4). The student's reflection on their work will inform the tutorial.


At the University of Reading, the e-Reflect tool will be used at various stages of a year-long research project to assist undergraduate students. This novel approach of using MAC to track and support a substantive piece of coursework is an exciting development. Students will be asked to reflect on the preparation of the literature review for their research project immediately after the submission deadline and again after the return of the assessed review with appropriate feedback generated soon after their reflection. Also, students will reflect on their performance at the end of the laboratory work or data collection period and again feedback will be generated.

At the University of Westminster, the School of Life Sciences has adapted the original MAC process so that it can be used to facilitate feedback on written examinations; this is a two-stage process. This use of the MAC process addresses the continuous concern that students do not receive feedback on exams. The first stage requires students to complete a questionnaire on an exam they have just taken that prompts the student to predict the grade they expect for the exam. It asks several questions about the way the student prepared for and answered the exam paper. The automated report derived from questionnaire completion provides tips on how to improve their future exam performance based on the responses they gave. Students then go on to write a reflection in their learning journal. The second stage of the process is initiated one month later (once all exam papers have been marked and approved by the exam board) at which point students are provided with an opportunity to see one of their exam papers annotated with written feedback. Students then complete a second e-Reflect questionnaire about their performance comparing the grade they predicted with their actual grade. They are sent a second automated report with suggestions of areas for further reflection after which they complete a second entry in their learning journal. This is shared with their personal tutor who is able to comment.
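The comparison made in the second stage, between the grade a student predicted and the grade actually awarded, can be sketched as below. The function name, the category labels and the 5-point tolerance are assumptions for illustration; the source does not describe how e-Reflect performs this comparison.

```python
def compare_prediction(predicted: float, actual: float, tolerance: float = 5.0) -> str:
    """Classify how a predicted exam mark compares with the actual mark.

    Marks are percentages. The tolerance (an arbitrary assumption) is the
    largest gap still treated as an accurate prediction.
    """
    gap = actual - predicted
    if abs(gap) <= tolerance:
        return "accurate"
    # A positive gap means the student did better than they expected.
    return "under-predicted" if gap > 0 else "over-predicted"
```

For example, `compare_prediction(70, 52)` returns `"over-predicted"`, the kind of outcome that could prompt a suggestion to reflect on exam preparation in the second automated report.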

Finally, at the University of Greenwich the MAC process is currently being adapted to support staff development by integrating it with a postgraduate teaching and learning course. By substituting the coursework element with objectives and skills this will permit a reflective approach whilst promoting a student-centric strategy for enhancement around teaching and learning. At the University of Bedfordshire there are plans to use the e-Reflect tool to help international students to adapt to studying in the UK. Reflective questionnaires will be used iteratively in an attempt to support students more in the critical early stages of their taught programme.

There is strong evidence that the MAC process can help some students engage with, and make more of, their feedback. It seems that a straightforward technology (e-Reflect) can be used to encourage students to think more about their feedback. Importantly, the introduction of the technology can potentially change the nature of a face-to-face tutorial system to focus the tutee/tutor relationship more on academic performance as well as influence how academic staff approach delivering feedback. These three connected transformations are of clear significance and, provided that groups of staff are convinced of the payback, and that MAC or some ‘flavour’ of it can be readily integrated into support mechanisms, there is every possibility that students will benefit. The consortium strategy has enhanced the development and realisation of the MAC process by a trial in a second Virtual Learning Environment (VLE) as well as developing Moodle blocks, links to UG research and exam feedback: all significant enhancements that would not have been achievable by a single institution. However, variations in delivery of the approach are emerging, as are uses of a MAC process linked explicitly to personal development planning and employability.

The MAC process has strong potential to support students in understanding and acting on their feedback, as well as being a catalyst for enhancing student–tutor academic relationships. Furthermore, with increasing pressures on staff and student time, a tool that can enhance the effectiveness of face-to-face tutorials as well as support learning through reflection could be a welcome addition to an institution's technology enhanced learning strategy. By working as part of an effective consortium, the development of the MAC process has been significantly enhanced, maximising the benefits of a project for those institutions involved, thereby increasing sustainability and dissemination within the sector.

Information on the MAC project can be found at: http://www.makingassessmentcount.ac.uk

The MAC project and the subsequent benefits realisation project were funded by Joint Information Systems Committee (JISC) as part of the Curriculum Delivery programme. http://www.jisc.ac.uk/whatwedo/programmes/elearning/curriculumdelivery.aspx

Carless, D. 2006. Differing perceptions in the feedback process. Studies in Higher Education 21, no. 2: 219–33.

Denton, P., J. Madden, M. Roberts, and P. Rowe. 2008. Students’ response to traditional and computer-assisted formative feedback: A comparative case study. British Journal of Educational Technology 39, no. 3: 486–500.

Gibbs, G., and C. Simpson. 2004. Conditions under which assessment supports students’ learning. Learning and Teaching in Higher Education 1: 3–31.

Hepplestone, S. 2010. Technology, feedback action! A short report. https://files.pbworks.com/download/MA1253u15k/evidencenet/23202481/guide%20for%20senior%20managers%20FINAL.pdf (accessed June 10, 2011)

Higgins, R., P. Hartley, and A. Skelton. 2002. The conscientious consumer; reconsidering the role of assessment feedback in student learning. Studies in Higher Education 27, no. 1: 53–64.

Hounsell, D., R. Xu, and C.M. Tai. 2007. Monitoring students’ experiences of assessment. (Scottish Enhancement Themes: Guides to Integrative Assessment, no.1). Gloucester: Quality Assurance Agency for Higher Education. http://www.enhancementthemes.ac.uk/publications/ (accessed June 10, 2011)

Kerrigan, M.J.P., M. Clements, A. Bond, and G. Saunders. 2009. eReflect-Making Assessment Count. Proceedings of the Fourth International Blended Learning Conference, June 2009 in University of Hertfordshire. ISBN: 978-1-905313-66-2, 219–32.

Macgregor, G., A. Spiers, and C. Taylor. 2011. Exploratory evaluation of audio email technology in formative assessment feedback. Research in Learning Technology 19, no. 1: 39–59.

Mutch, A. 2003. Exploring the practice of feedback to students. Active Learning in Higher Education 4, no. 1: 24–38.

Nichol, D. 2007. Improving assessment after the task. Re-Engineering Assessment Practices in Scottish Education (REAP) Online Resources. http://www.reap.ac.uk/nss/nssAfter02.html (accessed July 25, 2011)

Price, M., and B. O'Donovan. 2008. Feedback–All that effort, but what is the effect? Paper presented at EARLI/Northumbria Assessment Conference, August 27–29, Seminaris Seehotel, Potsdam, Germany.

Winter, C., and V.L. Dye. 2005. An investigation into the reasons why students do not collect marked assignments and the accompanying feedback. http://wlv.openrepository.com/wlv/bitstream/2436/3780/1/An%20investigation%20pgs%20133-141.pdf (accessed July 25, 2011)

Wojtas, O. 1998. Feedback? No, just give us the answers. Times Higher Education Supplement September 25: 7.